Researchers at the Skolkovo Institute of Science and Technology (Skoltech) in Moscow, Russia, have come up with a novel way to interface with a drone via hand movements.

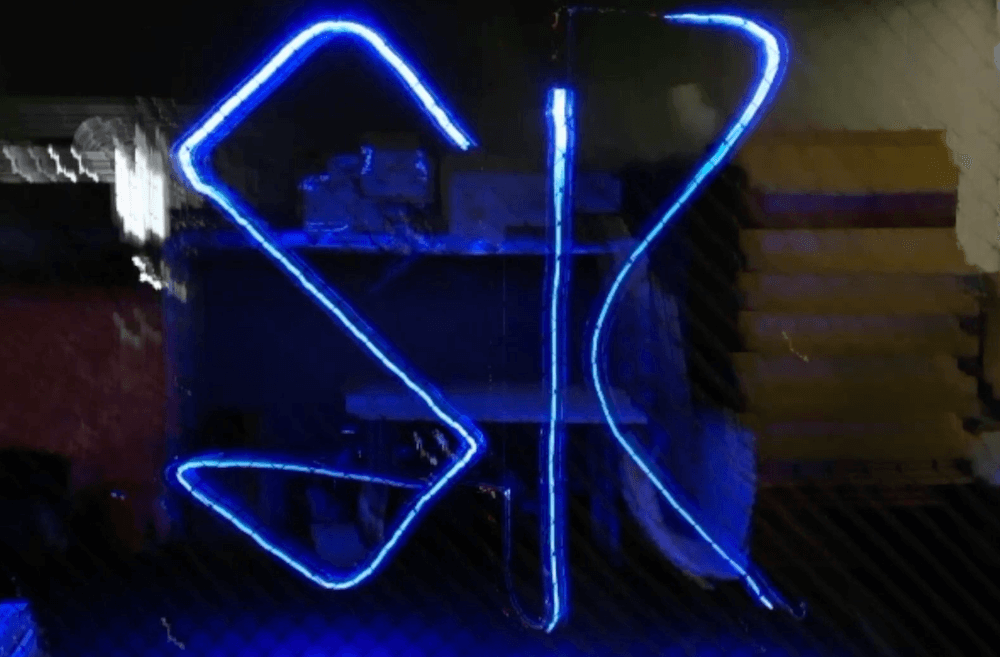

As shown in the video below, the device can be used to create long-exposure light art, though the possibilities for such an intuitive control system could extend to many other applications as well.

In this setup, a small Crazyflie 2.0 quadcopter is operated by a glove-like wearable featuring an Arduino Uno, along with an IMU and a flex sensor for user input, and an XBee module for wireless communication. The controller connects to a base station running a machine learning algorithm that matches the user’s gestures to pre-defined letters or patterns, and directs the drone to light-paint them.
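To give a feel for what "matching a gesture to a pre-defined letter" can look like, here is a minimal sketch in Python. It is not the team's algorithm, which this post does not describe. It swaps in a simple nearest-template matcher and assumes the glove's sensor data has already been turned into a 2D trace of (x, y) points. The `TEMPLATES` shapes and all function names are hypothetical, made up for illustration.

```python
import math

# Hypothetical letter templates as 2D polylines (not from the research paper).
TEMPLATES = {
    "L": [(0, 1), (0, 0), (1, 0)],
    "I": [(0, 1), (0, 0)],
    "V": [(0, 1), (0.5, 0), (1, 1)],
}


def resample(points, n=16):
    """Resample a polyline to n points evenly spaced along its length."""
    dists = [0.0]  # cumulative arc length at each input point
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        dists.append(dists[-1] + math.hypot(x1 - x0, y1 - y0))
    total = dists[-1]
    if total == 0:
        return [points[0]] * n
    out, j = [], 0
    for i in range(n):
        target = total * i / (n - 1)
        # Advance to the segment that contains the target arc length.
        while j < len(dists) - 2 and dists[j + 1] < target:
            j += 1
        seg = dists[j + 1] - dists[j]
        t = 0.0 if seg == 0 else (target - dists[j]) / seg
        out.append((
            points[j][0] + t * (points[j + 1][0] - points[j][0]),
            points[j][1] + t * (points[j + 1][1] - points[j][1]),
        ))
    return out


def normalize(points):
    """Center the trace on its centroid and scale it to fit a unit box."""
    cx = sum(x for x, _ in points) / len(points)
    cy = sum(y for _, y in points) / len(points)
    centered = [(x - cx, y - cy) for x, y in points]
    scale = max(max(abs(x), abs(y)) for x, y in centered) or 1.0
    return [(x / scale, y / scale) for x, y in centered]


def trace_distance(a, b):
    """Mean point-to-point distance between two prepared traces."""
    return sum(math.hypot(ax - bx, ay - by)
               for (ax, ay), (bx, by) in zip(a, b)) / len(a)


def classify(trace):
    """Return the label of the template closest to the given trace."""
    prepared = normalize(resample(trace))
    return min(
        TEMPLATES,
        key=lambda label: trace_distance(
            prepared, normalize(resample(TEMPLATES[label]))),
    )


# A slightly wobbly "down, then right" trace in arbitrary units.
gesture = [(5, 12), (5.1, 8), (5, 4), (9, 4.1), (13, 4)]
print(classify(gesture))
```

Resampling and normalizing make the comparison independent of how large, how fast, or where in space the gesture was drawn. A real system would more likely train a classifier on recorded sensor data, but the overall flow (capture a trace, map it to a label, send the matching flight path to the drone) would stay similar.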

The team’s full research paper is available here.
